GitHub - michaelnowotny/cocos: Numeric and scientific computing on GPUs for Python with a NumPy-like API

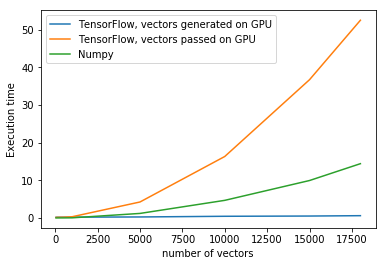

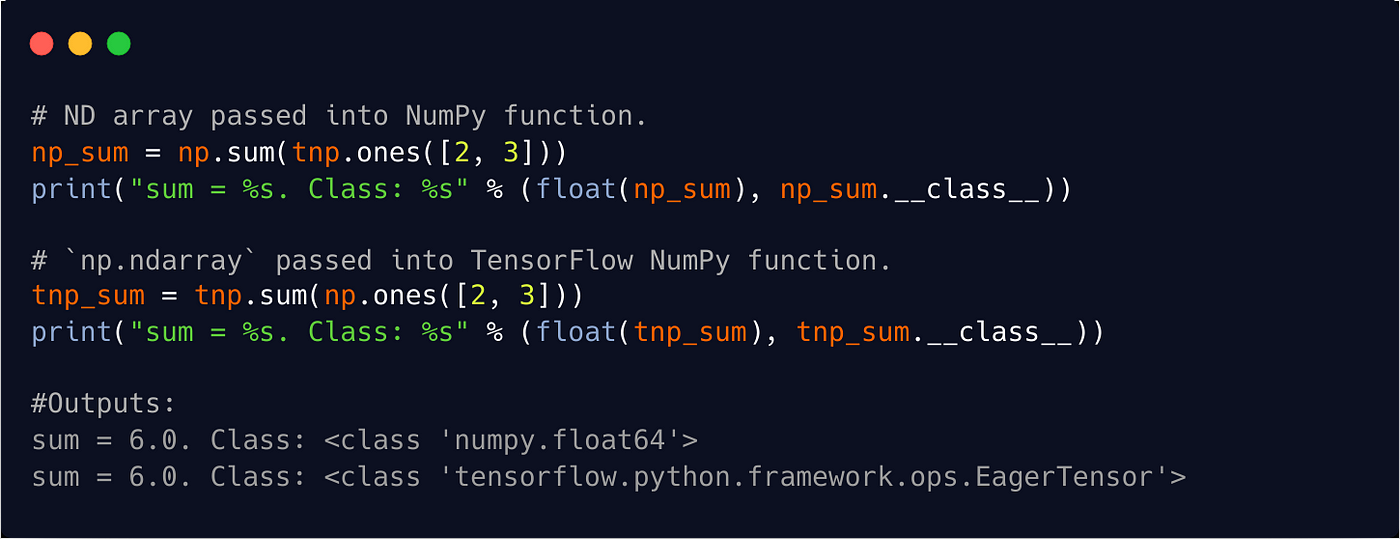

Numpy on GPU/TPU. Make your Numpy code to run 50x faster. | by Sambasivarao. K | Analytics Vidhya | Medium

python - cuPy error : Implicit conversion to a host NumPy array via __array__ is not allowed, - Stack Overflow

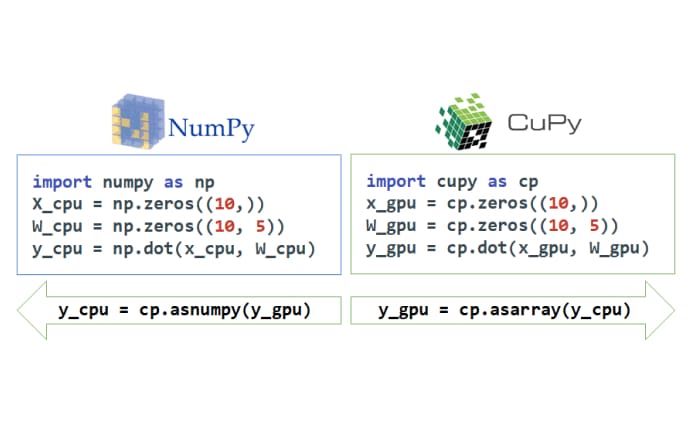

GT Py : Accelerating NumPy programs on CPU&GPU w/ Minimal Programming Effort | SciPy 2016 | Chi Luk - YouTube

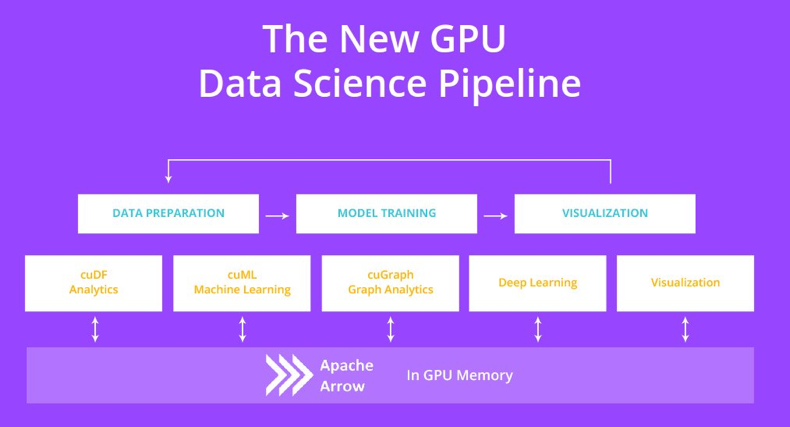

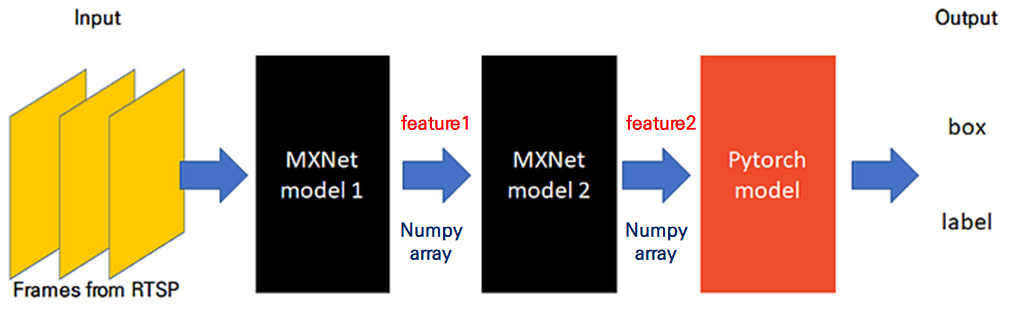

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science